MMgc is the Tamarin (née Macromedia) garbage collector, a memory management library that has been built as part of the AVM2/Tamarin effort. It is a static library that is linked into the Flash Player but kept separate, and can be incorporated into other programs.

Using MMgc

Managed vs. Unmanaged Memory

MMgc is not only a garbage collector, but a general-purpose memory manager. The Flash Player uses it for nearly all memory allocations.

MMgc can handle both managed and unmanaged memory.

Managed memory is memory that is reclaimed automatically by the garbage collector. The garbage collector is "managing" it, detecting when the memory is no longer reachable from anywhere in the application and reclaiming it at that time. In MMgc, you get managed memory by subclassing GCObject/GCFinalizedObject/RCObject, or by calling GC::Alloc.

Unmanaged memory is everything else. This is C++ memory management as you're accustomed to it. Memory can be allocated with the new operator, and must be explicitly deleted in your C++ code at some later time using the delete operator.

Another way to think about it:

- Unmanaged memory is C++ operators new and delete

- Managed memory is C++ operator new, with optional delete

MMgc contains a page allocator called GCHeap, which allocates large blocks (megabytes) of memory from the system and doles out 4KB pages to the unmanaged memory allocator (FixedMalloc) and the managed memory allocator (GC).

MMgc namespace

The MMgc library is in the C++ namespace MMgc.

You can qualify references to classes in the library; for example: MMgc::GC, MMgc::GCFinalizedObject.

Alternately, you can open the MMgc namespace in your C++ source so that you can refer to the objects more concisely:

using namespace MMgc;
...
GC* gc = GC::GetGC(this);
GCObject* gcObject;

GC class

The class MMgc::GC is the main class of the GC. It represents a full, self-contained instance of the garbage collector.

It may be multiply instantiated; you may have multiple instances of the garbage collector running at once. Each instance manages its own set of objects; objects are not allowed to reference objects in other GC instances.

The GC typically is constructed early in your program's initialization, and then passed to operations like operator new for allocating GC objects.

There are a few methods that you may need to call directly, such as Alloc and Free.

GC::Alloc, GC::Free

The Alloc and Free methods are garbage-collected analogs for malloc and free. Memory allocated with Alloc doesn't need to be explicitly freed, although it can be freed with Free if it is known that there are no other references to it.

Alloc is often used to allocate arrays and other objects of variable size that contain GC pointers or are otherwise desirable to have in managed memory.

These flags may be passed to Alloc to control the allocation type.

/**
 * flags to be passed as second argument to alloc
 */
enum AllocFlags
{
    kZero=1,
    kContainsPointers=2,
    kFinalize=4,
    kRCObject=8
};

kZero zeros out the memory. Otherwise, the memory contains undefined values.

kContainsPointers indicates to the GC that the memory will contain pointers to other GC objects, and thus needs to be scanned by the GC's mark phase. If you know for certain that the objects will not contain GC pointers, leave this flag off; it will make the mark phase faster by excluding your object.

kFinalize and kRCObject are used internally by the GC; you should not need to set them in your user code.
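Because the flags are distinct bits, they are combined with bitwise OR. The following is a minimal sketch that copies the enum above so it compiles on its own; the helper functions are hypothetical and are not part of MMgc. In real code the combined value would be passed as the second argument to Alloc, as the enum's comment says.

```cpp
#include <cassert>

// Local copy of the flag values listed above, so this sketch compiles on its own.
enum AllocFlags
{
    kZero = 1,
    kContainsPointers = 2,
    kFinalize = 4,
    kRCObject = 8
};

// Hypothetical helper: flags for a zero-filled array that will hold GC pointers.
inline int PointerArrayFlags()
{
    return kZero | kContainsPointers;
}

// Hypothetical helper: does the mark phase need to scan this allocation?
inline bool NeedsScanning(int flags)
{
    return (flags & kContainsPointers) != 0;
}

// Hypothetical helper: true if only the flags meant for user code are set
// (kFinalize and kRCObject are reserved for the GC's internal use).
inline bool OnlyUserFlags(int flags)
{
    return (flags & ~(kZero | kContainsPointers)) == 0;
}
```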

GC::GetGC

Given the pointer to any GCObject, it is possible to get a pointer to the GC object that allocated it.

void GCObject::Method() {
    GC* gc = MMgc::GC::GetGC(this);
    ...
}

This practice should be used sparingly, but is sometimes useful.
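How can GetGC find the collector from an arbitrary object pointer? One common technique, assumed here purely for illustration and not confirmed as MMgc's implementation, is to allocate from 4KB-aligned pages (the page size GCHeap hands out) and store a header at the start of each page. Rounding the object's address down to a page boundary then yields the header. Collector, PageHeader, AllocPage, and OwnerOf are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <new>

constexpr std::size_t kPageSize = 4096;  // size and alignment of each page

// Stands in for MMgc::GC in this sketch.
struct Collector
{
    int id;
};

// Hypothetical header stored at the start of every page.
struct PageHeader
{
    Collector* owner;
};

// Allocate one 4KB-aligned page and record which collector owns it.
inline void* AllocPage(Collector* owner)
{
    void* page = std::aligned_alloc(kPageSize, kPageSize);
    if (!page) throw std::bad_alloc();
    static_cast<PageHeader*>(page)->owner = owner;
    return page;
}

// Map any pointer into a page back to its owner by rounding the address
// down to the page boundary and reading the header there.
inline Collector* OwnerOf(const void* item)
{
    auto addr = reinterpret_cast<std::uintptr_t>(item);
    auto pageStart = addr & ~static_cast<std::uintptr_t>(kPageSize - 1);
    return reinterpret_cast<const PageHeader*>(pageStart)->owner;
}
```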

Base Classes

GCObject

A basic garbage collected object.

- A GCObject is allocated with parameterized operator new, passing the MMgc::GC object:

class MyObject : public MMgc::GCObject { ... };

MyObject* myObject = new (gc) MyObject();

- Any pointers to a GCObject from unmanaged memory require the unmanaged object to be a GCRoot.

class MyUnmanagedObject : public MMgc::GCRoot {
    MyObject *object;
};

- Any pointers to a GCObject from managed memory require a DWB write barrier macro.

class MyOtherManagedObject : public MMgc::GCObject {
    DWB(MyObject*) object;
};

- Now for the good part... there is no need to delete instances of MyObject, because the GC will clean them up automatically when they are unreachable.

- However, a GCObject can be deleted explicitly with the delete operator. Only do this if you know for certain that there are no other references, and you want to help the GC along:

// Optimization: Get rid of myObject now, because we know there are no other
// references, so no need to wait for GC to clean it up.
delete myObject;

- The destructor for a GCObject will never be called (unless the object is also a descendant of GCFinalizedObject... see below.)

class MyObject : public MMgc::GCObject {
    ~MyObject() { assert(!"this will never be hit (unless we also descend from GCFinalizedObject)"); }
};

GCObject::GetWeakRef

GCWeakRef *GetWeakRef() const;

The GetWeakRef method returns a weak reference to the object. Normally, a pointer to the object is considered a hard reference -- any such reference will prevent the object from being destroyed. Sometimes, it is desirable to hold a pointer to a GCObject, but to let the object be destroyed if there are no other references. GCWeakRef can be used for this purpose. It has a get method which returns the pointer to the original object, or NULL if that object has already been destroyed.
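The weak-reference contract can be sketched as below. This is a simplified model under stated assumptions: the object owns at most one weak reference and clears it in its destructor, and std::shared_ptr stands in for the garbage-collected lifetime of the real GCWeakRef. Target and WeakRef are hypothetical names.

```cpp
#include <cassert>
#include <memory>

class Target;

// Hypothetical weak reference: get() returns the target, or nullptr once it is gone.
class WeakRef
{
public:
    explicit WeakRef(Target* t) : target_(t) {}
    Target* get() const { return target_; }

private:
    friend class Target;  // only the target may clear the pointer
    Target* target_;
};

class Target
{
public:
    // Lazily create the single weak reference for this object.
    std::shared_ptr<WeakRef> GetWeakRef()
    {
        if (!weak_) weak_ = std::make_shared<WeakRef>(this);
        return weak_;
    }

    // On destruction, clear the weak reference so holders see nullptr.
    ~Target()
    {
        if (weak_) weak_->target_ = nullptr;
    }

private:
    std::shared_ptr<WeakRef> weak_;
};
```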

GCFinalizedObject

Base class: GCObject

A garbage collected object with finalization support.

All of the rules from GCObject above apply to GCFinalizedObject.

The finalizer (C++ destructor) of a GCFinalizedObject will be invoked when MMgc collects the object.

class MyFinalizedObject : public MMgc::GCFinalizedObject
{
public:
    ~MyFinalizedObject()
    {
        // Do finalization behavior, like closing network connections,
        // freeing unmanaged memory owned by this object, etc.
    }
};

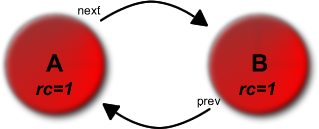

RCObject

Base class: GCFinalizedObject

This is a reference-counted, garbage collected object.

RCObject is used instead of GCObject when more immediate reclamation of memory is desired. For instance, the avmplus::String class in AVM+ is an RCObject. Strings are created very frequently, and are often temporary objects with very short lifetimes. By making them RCObjects, the GC is able to reclaim them much faster and limit memory growth.

All of the rules for GCObject apply to RCObject, and there are a few more:

- Any pointer to an RCObject from unmanaged memory must use the DRC macro.

class MyObject : public MMgc::RCObject { ... };

class MyUnmanagedObject : public MMgc::GCRoot {
    DRC(MyObject*) myObject;
};

- Any pointer to an RCObject from managed (GC) memory must use the DRCWB macro. This is true whether the object containing the pointer is a GCObject or an RCObject.

class MyObject : public MMgc::RCObject { ... };

class MyOtherManagedGCObject : public MMgc::GCObject {
    DRCWB(MyObject*) myObject;
};

class MyOtherManagedRCObject : public MMgc::RCObject {
    DRCWB(MyObject*) myObject;
};

- The RCObject must zero itself out on deletion. For this reason, RCObjects always have finalizers. Declare a destructor that zeros out all of the fields of your RCObject. See Zeroing RCObjects for more information.

class MyObject : public MMgc::RCObject {
public:
    MyObject() { x = 1; y = 2; z = 3; }
    ~MyObject() { x = y = z = 0; }
private:
    int x;
    int y;
    int z;
};

GCRoot

If you have a pointer to a GCObject from an object in unmanaged memory, the unmanaged object must be a subclass of GCRoot.

GCRoot must be subclassed by any unmanaged memory class that holds GC pointers.

class MyGCObject : public MMgc::GCObject { ... };

class MyGCRoot : public MMgc::GCRoot {
    MyGCObject* myGCObject;
};

Note that a GCRoot is NOT a garbage-collected object. It is an unmanaged memory object that contains GC pointers.

MMgc keeps a list of all GCRoots in the system and makes sure that it marks them. GCRoots are generally expected to be allocated using MMgc's unmanaged memory allocators, so that MMgc can figure out how big the GCRoot object is.

Use of GCRoot is required to have GC pointers from unmanaged memory, since without GCRoot, those pointers won't be marked by the mark phase of the GC.

Note that GCRoot can be used either by subclassing, or by creating a GCRoot and passing it the memory locations to treat as a root:

/** subclassing constructor */
GCRoot(GC *gc);

/** general constructor */
GCRoot(GC *gc, const void *object, size_t size);

Allocating objects

Allocating unmanaged objects is as simple as using global operator new/delete, the same way you always have.

To allocate a managed (GC) object, you must use the parameterized form of operator new, and pass it a reference to the MMgc::GC object.

class MyObject : public MMgc::GCObject { ... };

...

MyObject* myObject = new (gc) MyObject();

DWB/DRC/DRCWB

There are several smart pointer templates which must be used in your C++ code to work properly with MMgc.

DWB

DWB stands for Declared Write Barrier.

It must be used on a pointer to a GCObject/GCFinalizedObject, when that pointer is a member variable of a class derived from GCObject/GCFinalizedObject/RCObject/RCFinalizedObject.

class MyManagedClass : public MMgc::GCObject
{
    // MyManagedClass is a GCObject, and
    // avmplus::Hashtable is a GCObject, so use DWB
    DWB(avmplus::Hashtable*) myTable;
};

DRC

DRC stands for Deferred Reference Counted.

It must be used on a pointer to an RCObject/RCFinalizedObject, when that pointer is a member variable of a C++ class in unmanaged memory.

class MyUnmanagedClass
{
    // MyUnmanagedClass is not a GCObject, and
    // avmplus::Stringp points to an RCObject, so use DRC
    DRC(Stringp) myString;
};

DRCWB

DRCWB stands for Deferred Reference Counted, with Write Barrier.

It must be used on a pointer to an RCObject/RCFinalizedObject, when that pointer is a member variable of a class derived from GCObject/GCFinalizedObject/RCObject/RCFinalizedObject.

class MyManagedClass : public MMgc::GCObject
{
    // MyManagedClass is a GCObject, and
    // avmplus::Stringp points to an RCObject, so use DRCWB
    DRCWB(Stringp) myString;
};

When are the macros not needed?

Write barriers are not needed for stack-based local variables, regardless of whether the object pointed to is GCObject, GCFinalizedObject, RCObject or RCFinalizedObject. The GC marks the entire stack during collection, and not incrementally, so write barriers aren't needed.

Write barriers are not needed for pointers to GC objects from unmanaged memory (GCRoot). GCRoots are marked at the end of the mark phase, and not incrementally, so no write barriers are required. DRC() is required for RC objects, since the reference count must be maintained.

Write barriers are not needed for C++ objects that exist purely on the stack, and never in the heap. The Flash Player class "NativeInfo" is a good example. Such objects are essentially the same as stack-based local variables.

Zeroing RCObjects

All RCObjects (including all subclasses) must zero themselves out completely upon destruction. Asserts enforce this. The reason is that our collector used to zero memory upon free, and this was hurting performance. Since MMgc must already traverse objects upon destruction to decrement refcounts properly, I just made destructors do the zeroing too. This mostly applies to non-pointer fields, since DRCWB smart pointers do this for you.

Poisoned Memory

In DEBUG builds, MMgc writes "poison" values into uninitialized and deallocated memory, and a guard value after each allocation, as debugging aids. Here's what the different values mean:

| Value | Meaning |
|---|---|
| 0xfafafafa | Uninitialized unmanaged memory |
| 0xedededed | Unmanaged memory that was freed explicitly |
| 0xbabababa | Managed memory that was freed by the Sweep phase of the garbage collector |
| 0xcacacaca | Managed memory that was freed by an explicit call to GC::Free (including DRC reaping) |
| 0xdeadbeef | Written to the 4 bytes just after any object allocated via MMgc; used for overwrite detection |
| 0xfbfbfbfb | A block given back to the heap manager is memset to this (fb == free block) |
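The guard-word and poison checks can be sketched as follows. This is a simplified model, not MMgc's code: it covers unmanaged memory only, the function names are hypothetical, and freed blocks are kept (quarantined) instead of being returned to the system so that their poison can be re-checked later.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <cstring>

// Values from the table above.
constexpr std::uint8_t  kUninitUnmanaged = 0xfa;  // 0xfafafafa
constexpr std::uint8_t  kFreedUnmanaged  = 0xed;  // 0xedededed
constexpr std::uint32_t kGuard           = 0xdeadbeef;

// Hypothetical debug allocator: poison new memory and append a 4-byte guard word.
inline void* DebugAlloc(std::size_t size)
{
    auto* p = static_cast<std::uint8_t*>(std::malloc(size + sizeof(kGuard)));
    if (!p) return nullptr;
    std::memset(p, kUninitUnmanaged, size);
    std::memcpy(p + size, &kGuard, sizeof(kGuard));
    return p;
}

// True if the guard word just past the object is intact (no overwrite).
inline bool GuardIntact(const void* p, std::size_t size)
{
    std::uint32_t word;
    std::memcpy(&word, static_cast<const std::uint8_t*>(p) + size, sizeof(word));
    return word == kGuard;
}

// Poison a block on free. The block is quarantined rather than released,
// so later writes to it can be detected; call std::free to release it.
inline void DebugFree(void* p, std::size_t size)
{
    std::memset(p, kFreedUnmanaged, size);
}

// True if nothing has written into the block since DebugFree poisoned it.
inline bool PoisonIntact(const void* p, std::size_t size)
{
    const auto* b = static_cast<const std::uint8_t*>(p);
    for (std::size_t i = 0; i < size; ++i)
        if (b[i] != kFreedUnmanaged) return false;
    return true;
}
```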

Finalizers

If your C++ class is a subclass of GCFinalizedObject or RCFinalizedObject, it has finalizer support.

A finalizer is a method which will be invoked by the GC when an unreachable object is about to be destroyed.

It's similar to a destructor. It differs from a destructor in that it is usually called nondeterministically, i.e. in whatever random order the GC decides to destroy objects. C++ destructors are usually invoked in a comparatively predictable order, since they're invoked explicitly by the application code.

In MMgc, the C++ destructor is actually used as the finalizer.

If you don't subclass GCFinalizedObject or RCFinalizedObject, any C++ destructor on your garbage collected class will basically be ignored. Only if you subclass GCFinalizedObject or RCFinalizedObject will MMgc know that you want finalization behavior on your class.

It's best to avoid finalizers if you can, since finalization behavior can be unpredictable and nondeterministic, and also slows down the GC since the finalizers need to be invoked.

Finalizer Access Rules

Finalizers are very restricted in the set of objects they may access. Finalizers may not perform any of the following actions:

- Fire any write barriers

- Dereference a pointer to any GC object, including member variables (except see below about RCObject references)

- Allocate any GC memory (GC::Alloc) or explicitly free GC memory (GC::Free)

- Change the set of GC roots (create a GCRoot object or derivative)

- Cause itself to become reachable

If a finalized object holds a reference to an RCObject, it may safely call decrementref on the RCObject.

Threading

The GC routines are not currently thread-safe. We're operating under the assumption that none of the threads spawned by the player create GC'd objects. If this isn't true, we hope to stop other threads from doing so; if we can't, we will be forced to make our GC thread-safe, although we hope we don't have to.

Threading gets more complicated because it makes sense to rewrite ChunkMalloc and ChunkAllocBase to get their blocks from the GCHeap. They can also take advantage of the 4K boundary to eliminate the 4-byte-per-allocation penalty.

Troubleshooting MMgc

Dealing with bugs

GC bugs are hard.

Forgetting a write barrier

If you forget to put a write barrier on a pointer, the incremental mark process might miss the pointer being changed. The result will be an object that your code has a pointer to, but which the GC thinks is unreachable. The GC will destroy the object, and later you will crash with a dangling pointer.

When you crash with what looks like a dangling pointer to a GC object, check for missing write barriers in the vicinity.

Forgetting a DRC

If you forget to put a DRC macro on a pointer to an RCObject from unmanaged memory, you can get a dangling pointer. The reference count of the object may go to zero, and the object will be placed in the ZCT. Later, the ZCT will be reaped and the object will be destroyed. But you still have a pointer to it. When you dereference the pointer later, you'll crash with a dangling pointer.

When you crash with what looks like a dangling pointer to an RC object, look at who refers to the object. See if there are missing DRC macros that need to be put in.

Wrong macro

If you put DWB instead of DRCWB, you'll avoid dangling pointer issues from a missing write barrier, but you might hit dangling pointer issues from a zero reference count.

If you crash with a dangling pointer to an RC object, check for DWB macros that need to be DRCWB.

Unmarked unmanaged memory

If you have pointers to GC objects in your unmanaged memory objects, the unmanaged objects need to be GCRoots.

GCRoots are known to the GC and will be marked during a collection. Pointers must be marked for the GC to consider the objects "live"; otherwise, the objects will be considered unreachable and will be destroyed. And you'll be left with dangling pointers to these destroyed objects.

If you get a crash dereferencing a pointer to a GC object, and the pointer was a member variable in an unmanaged (non-GC) object, check whether the unmanaged object is a GCRoot. If it isn't, maybe it needs to be.

Finding missing write barriers

There are some automatic aids in the MMgc library which can help you find missing write barriers. Look in MMgc/GC.cpp.

// before sweeping we check for missing write barriers
bool GC::incrementalValidation = false;

// check for missing write barriers at every Alloc
bool GC::incrementalValidationPedantic = false;

If you suspect you have missing write barriers, turn these switches on in a DEBUG build. (The second switch will slow your application down a lot more than the first switch, so you could try the first, then the second.)

When a missing write barrier is detected, MMgc will assert and drop you into the debugger, and will print out a message telling you which object contained the missing write barrier, the address of the member variable that needs it, and what object didn't get marked due to the missing write barrier.

Sometimes, this missing write barrier detection will turn up a false positive. If you can't find anything wrong with the code, it might just be a false positive.

Debugging Aids

MMgc has several debugging aids that can be useful in your development work.

Underwrite/overwrite detection

MMgc can often detect when you write outside the boundaries of an object, and will throw an assert in debugging builds when this happens.

Leak detection (for unmanaged memory)

When the application is exiting, MMgc will detect memory leaks in its unmanaged memory allocators and print out the addresses and sizes of the leaked objects, and stack traces if stack traces are enabled.

Stack traces are enabled via the MMGC_MEMORY_PROFILER feature and setting the MMGC_PROFILE environment variable to 1. The MMGC_MEMORY_PROFILER feature is implied by the debugger feature and is always on in DEBUG builds.

Deleted object poisoning and write detection

MMgc will "poison" memory for deleted objects, and will detect if the poison has been written over by the application, which would indicate a write to a deleted object.

Stack traces (walk stack frame and lookup IPs)

When #define MEMORY_INFO is on, MMgc will capture a stack trace for every object allocation. This slows the application down but can be invaluable when debugging. Memory leaks will be displayed with their stack trace of origin.

Sample stack trace:

xmlclass.cpp:391 toplevel.cpp:164 toplevel.cpp:507 interpreter.cpp:1098 interpreter.cpp:20 methodenv.cpp:47

Allocation traces, deletion traces etc.

If you're trying to see why memory is not getting reclaimed, GC::WhosPointingAtMe() can be called from the MSVC debugger; it will print out the objects that are pointing to the given memory location.

Memory Profiler

MMgc's memory profiler can display the state of your application's heap, showing the different classes of object in memory, along with object counts, byte counts, and percentage of total memory. It can also display stack traces for where every object was allocated. The report can be output to the console or to a file, and can be configured to be displayed pre/post sweep or via API call.

The Memory Profiler uses RTTI and stack traces to get information by location and type:

class avmplus::GrowableBuffer - 24.9% - 3015 kb 514 items, avg 6007b

98.9% - 2983 kb - 512 items - poolobject.cpp:29 abcparser.cpp:948 …

0.8% - 24 kb - 1 items - poolobject.cpp:29 abcparser.cpp:948 …

class avmplus::String - 13.2% - 1602 kb 15675 items, avg 104b

65.6% - 1051 kb - 14397 items - stringobject.cpp:46 avmcore.cpp:2300 …

20.4% - 326 kb - 10439 items - avmcore.cpp:2300 abcparser.cpp:1077 …

6.5% - 103 kb - 3311 items - avmcore.cpp:2300 abcparser.cpp:1077 …

Other Profiling Tools

The gcstats flag on the GC object controls verbose output. In the avmshell this is enabled with the -memstats flag. Output looks like this:

[mem] ------- gross stats -----
[mem] private 5877 (23.0M) 100%
[mem] mmgc 5792 (22.6M) 98%
[mem] unmanaged 13 (52K) 0%
[mem] managed 2596 (10.1M) 44%
[mem] free 3081 (12.0M) 52%
[mem] jit 0 (0K) 0%
[mem] other 85 (340K) 1%
[mem] bytes (interal fragmentation) 2527 (9.9M) 96%
[mem] managed bytes 2520 (9.8M) 97%
[mem] unmanaged bytes 7 (28K) 53%
[mem] -------- gross stats end -----

Numbers are in pages (with megabytes or kilobytes in parentheses). Private is the number of private committed pages in the process; mmgc is the amount of memory GCHeap has asked for from the OS (not including JIT). The unmanaged vs. managed split shows FixedMalloc vs. GC allocations. Free is the amount of memory GCHeap is holding onto that isn't in use by the mutator. Other is the delta between private and mmgc; it includes things like system malloc, stacks, loaded library data areas, etc. When this flag is enabled, this information is logged every time something interesting happens. So far, "something interesting" means an incremental mark cycle, a sweep, or a DRC reap. These events are logged like this:

[mem] sweep(21) reclaimed 910 whole pages (3640 kb) in 22.66 millis (2.4975 s)
[mem] mark(1) 0 objects (180866 kb 205162 mb/s) in 0.88 millis (2.5195 s)
[mem] DRC reaped 114040 objects (3563 kb) freeing 903 pages (7800 kb) in 17.41 millis (2.0015 s)
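The gross stats can be cross-checked with a little arithmetic, assuming 4KB pages. For example, private 5877 pages is 5877 * 4096 bytes, about 22.96 MB, printed as 23.0M; other is 5877 - 5792 = 85 pages, or 340K. The percentages in the sample are consistent with being taken relative to private and truncated (2596 / 5877 is about 44.2%, shown as 44%); that is an inference from these numbers, not documented behavior. The helper names below are hypothetical.

```cpp
#include <cassert>
#include <cmath>

constexpr double kPageBytes = 4096.0;  // assumed page size (4KB)

// Convert a page count from the [mem] report to megabytes.
inline double PagesToMB(long pages)
{
    return pages * kPageBytes / (1024.0 * 1024.0);
}

// Convert a page count to kilobytes (4KB per page).
inline long PagesToKB(long pages)
{
    return pages * 4;
}

// "other": private memory that did not come from GCHeap.
inline long OtherPages(long privatePages, long mmgcPages)
{
    return privatePages - mmgcPages;
}

// Percentage relative to private, truncated toward zero (inferred convention).
inline int PercentOfPrivate(long pages, long privatePages)
{
    return static_cast<int>(100.0 * pages / privatePages);
}
```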

How MMgc works

Mark/Sweep

The MMgc garbage collector uses a mark/sweep algorithm. This is one of the most common garbage collection algorithms.

Every object in the system has an associated "mark bit."

A garbage collection is divided into two phases: Mark and Sweep.

In the Mark phase, all of the mark bits are cleared. The garbage collector is aware of "roots", which are starting points from which all "live" application data should be reachable. The collector starts scanning objects, starting at the roots and fanning outwards. For every object it encounters, it sets the mark bit.

When the Mark phase concludes, the Sweep phase begins. In the Sweep phase, every object that wasn't marked in the Mark phase is destroyed and its memory reclaimed. If an object didn't have its mark bit set during the Mark phase, that means it wasn't reachable from the roots anymore, and thus was not reachable from anywhere in the application code.

The following Flash animation illustrates the working of a mark/sweep collector:

[Flash animation: GC.swf (temporarily not working)]
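The two phases described above can be sketched on a toy heap in which objects are identified by index. This is an illustrative model, not MMgc's data structures: Obj, Mark, and Sweep are hypothetical, the roots are passed in explicitly, and "reclaiming" an object only clears its live flag.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Toy object: a live flag, a mark bit, and outgoing references by index.
struct Obj
{
    bool live = true;
    bool marked = false;
    std::vector<std::size_t> refs;
};

// Mark phase: clear every mark bit, then set it on everything reachable
// from the roots, fanning outwards with an explicit work list.
inline void Mark(std::vector<Obj>& heap, const std::vector<std::size_t>& roots)
{
    for (Obj& o : heap) o.marked = false;
    std::vector<std::size_t> work(roots.begin(), roots.end());
    while (!work.empty())
    {
        std::size_t i = work.back();
        work.pop_back();
        if (heap[i].marked) continue;
        heap[i].marked = true;
        for (std::size_t r : heap[i].refs) work.push_back(r);
    }
}

// Sweep phase: every live object that was not marked is unreachable,
// so reclaim it. Returns the number of objects reclaimed.
inline std::size_t Sweep(std::vector<Obj>& heap)
{
    std::size_t reclaimed = 0;
    for (Obj& o : heap)
    {
        if (o.live && !o.marked)
        {
            o.live = false;
            o.refs.clear();
            ++reclaimed;
        }
    }
    return reclaimed;
}
```

Because liveness depends only on reachability from the roots, two objects that refer only to each other are reclaimed as well.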

One pass

The mark/sweep algorithm described above decomposes into four steps: ClearMarks, Mark, Finalize, and Sweep. In our original implementation, ClearMarks, Finalize, and Sweep each visited every GC page and every object on that page. Three passes! Now marks are cleared during sweep, so a separate ClearMarks pass isn't needed at the start. Also, Finalize builds up a list of pages that need sweeping, so Sweep doesn't need to visit every page. This has been shown to cut the Finalize/Sweep pause (the two run back to back, atomically) in half. Overall this wasn't a huge performance increase, because the majority of our time is spent in the Mark phase.
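A sketch of the page-list idea, under stated assumptions: pages are hypothetical arrays of allocated/mark bits, finalizers are omitted, pages that Finalize finds fully live have their marks reset on the spot, and pages that hold garbage have their marks cleared by Sweep as it frees. This shows one way to fold ClearMarks into the Finalize/Sweep work; it is not necessarily how MMgc arranges it.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical page of fixed-size objects with per-object bits.
struct Page
{
    std::vector<bool> allocated;
    std::vector<bool> marked;
};

// Visit every page once and record the pages that contain garbage.
// A real collector would also run finalizers for unmarked objects here.
inline std::vector<std::size_t> Finalize(std::vector<Page>& pages)
{
    std::vector<std::size_t> toSweep;
    for (std::size_t p = 0; p < pages.size(); ++p)
    {
        Page& page = pages[p];
        bool hasGarbage = false;
        for (std::size_t i = 0; i < page.allocated.size(); ++i)
            if (page.allocated[i] && !page.marked[i]) hasGarbage = true;
        if (hasGarbage)
            toSweep.push_back(p);
        else
            page.marked.assign(page.marked.size(), false);  // fully live: reset marks now
    }
    return toSweep;
}

// Visit only the recorded pages: free unmarked objects and clear the
// remaining marks in the same loop. Returns the number of objects freed.
inline std::size_t Sweep(std::vector<Page>& pages, const std::vector<std::size_t>& toSweep)
{
    std::size_t freed = 0;
    for (std::size_t p : toSweep)
    {
        Page& page = pages[p];
        for (std::size_t i = 0; i < page.allocated.size(); ++i)
        {
            if (page.allocated[i] && !page.marked[i])
            {
                page.allocated[i] = false;
                ++freed;
            }
            page.marked[i] = false;
        }
    }
    return freed;
}
```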

Conservative Collection

MMgc is a conservative mark/sweep collector. Conservative means that it may not reclaim all of the memory that it is possible to reclaim; it will sometimes make a "conservative" decision and not reclaim memory that might've actually been free.

Why make the collector conservative? It simplifies writing C++ application code to run on top of the collector. The alternative to conservative collection is exact collection. To do exact collection, every C++ class and variable would need to specify exactly which fields contain GC pointers. This is a lot to ask from our C++ developers, so instead, MMgc assumes that every memory location might potentially contain a GC pointer.

That means that it might occasionally turn up a "false positive." A false positive is a memory location that looks like it contains a pointer to a GC object, but it's really just some JPEG image data or an integer variable or some other unrelated data. When the GC encounters a false positive, it has to assume that it MIGHT be a pointer since it doesn't have an exact description of whether that memory is a pointer or not. So, the not-really-pointed-to object will be leaked.
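The conservative test can be sketched as a range-and-alignment check on every word being scanned. This is an illustrative model with hypothetical names (ToyHeap, ScanConservatively) that only recognizes pointers to the start of an object. An integer that happens to equal an object's address passes the same test as a real pointer, which is exactly the false positive described above.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical toy heap: `count` objects of `objSize` bytes starting at `base`.
struct ToyHeap
{
    std::uintptr_t base;
    std::size_t objSize;
    std::size_t count;

    // Conservative test: any word that points at the start of an object is
    // treated as a pointer, whether or not it really is one.
    bool LooksLikePointer(std::uintptr_t word, std::size_t* index) const
    {
        if (word < base) return false;
        std::uintptr_t offset = word - base;
        if (offset % objSize != 0) return false;
        std::size_t i = offset / objSize;
        if (i >= count) return false;
        *index = i;
        return true;
    }
};

// Scan a block of raw words (stack, root, or object body) and mark every
// object that any word appears to point to.
inline void ScanConservatively(const ToyHeap& heap,
                               const std::vector<std::uintptr_t>& words,
                               std::vector<bool>& marked)
{
    for (std::uintptr_t w : words)
    {
        std::size_t i;
        if (heap.LooksLikePointer(w, &i)) marked[i] = true;
    }
}
```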

Memory leaks don't sound like an OK thing, right? Well, memory leaks that result from programmer error tend to be bad leaks... leaks that grow over time. With such a leak, you can be pushing hundreds of megabytes of RAM real quick. With a conservative GC, the leaks tend to be random, such that they don't grow over time. The occasional random leak from a false positive can be OK. That doesn't mean we shouldn't worry about it at all, but often conservative GC suffices.

It is possible that a future version of MMgc might do exact marking. This would be needed for a generational collector.

Deferred Reference Counting (DRC)

MMgc uses Deferred Reference Counting (DRC). DRC is a scheme for getting more immediate reclamation of objects, while still achieving high performance and getting the other benefits of garbage collection.

Classic Reference Counting

Previous versions of the Flash Player, up to Flash Player 7, used reference counting to track object lifetimes.

class Object
{
public:
    Object() { refCount = 0; }
    void AddRef() { refCount++; }
    void Release() {
        if (!--refCount) delete this;
    }
    int refCount;
};

Reference counting is a kind of automatic memory management. Reference counting can track relationships between objects, and as long as AddRef and Release are called at the proper times, can reclaim memory from objects that are no longer referenced.

Problem: Circular References

Reference counting falls down when circular references occur in objects. If object A and object B are reference counted and refer to each other, their reference counts will both be nonzero even if no other objects in the system point to them. Locked in this embrace, they will never be destroyed.
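A minimal illustration of the cycle problem, using the same AddRef/Release scheme as the Object class above. Node, SetNext, and the g_destroyed counter are hypothetical names added for the demonstration.

```cpp
#include <cassert>

int g_destroyed = 0;  // counts destructor calls for the demonstration

// Classic intrusive reference counting, as in the Object class above.
class Node
{
public:
    void AddRef() { ++refCount; }
    void Release() { if (--refCount == 0) delete this; }

    // Replace this node's counted reference to another node.
    void SetNext(Node* n)
    {
        if (n) n->AddRef();
        Node* old = next;
        next = n;
        if (old) old->Release();
    }

    ~Node()
    {
        ++g_destroyed;
        if (next) next->Release();
    }

private:
    int refCount = 0;
    Node* next = nullptr;
};
```

If two nodes are linked to each other with SetNext and every outside reference is then released, each node still holds a count of 1 and g_destroyed stays at 0: the pair is leaked until the cycle is broken by hand.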

This is where garbage collection helps. Mark/sweep garbage collection can detect that these objects containing circular references are really not reachable from anywhere else in the application, and can reclaim them.

The problem with going from reference counting to pure mark/sweep garbage collection is that a lot of time may be spent in the garbage collector. This time will pause the entire application, and give the impression of poor performance. Even with an incremental collector that doesn't have big pauses, a GC sweep only kicks in every so often, so memory usage can grow very quickly to a high peak before the GC collects unused objects.

So, some kind of reference counting is still attractive to lower the amount of work the GC has to do, and to get more immediacy on memory reclamation.

However, reference counting is also slow because the reference counts need to be constantly maintained. So, it's attractive to find some form of reference counting that doesn't require maintaining reference counts for every single reference.

Enter Deferred Reference Counting

In Deferred Reference Counting, a distinction is made between heap and stack references.

Stack references to objects tend to be very temporary in nature. Stack frames come and go very quickly. So, performance can be gained by not performing reference counting on the stack references.

Heap references are different, since they can persist for long periods of time. So, in a DRC scheme, we continue to maintain reference counts in heap-based objects, but only for heap-to-heap references.

We basically ignore the stack and registers; registers are treated as stack memory.

Zero Count Table

Of course, when an object's reference count goes to zero, what happens? If the object was immediately destroyed, that could leave dangling pointers on the stack, since we didn't bump up the object's reference count when stack references were made to it.

To deal with this, there is a mechanism called the Zero Count Table (ZCT).

When an object reaches zero reference count, it is not immediately destroyed; instead, it is put in the ZCT.

When the ZCT is full, it is "reaped" to destroy some objects.

If an object is in the ZCT, it is known that there are no heap references to it. So, there can only be stack references to it. MMgc scans the stack to see if there are any stack references to ZCT objects. Any objects in the ZCT that are NOT found on the stack are deleted.
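The ZCT mechanism can be sketched as below. It is a simplified model under stated assumptions: the stack is passed in as an explicit list of pointers rather than scanned conservatively, "destroying" an object only sets a flag, objects still referenced from the stack simply stay in the table until the next reap, and RCObj and ZeroCountTable are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical deferred-reference-counted object: only heap references are counted.
struct RCObj
{
    int heapRefs = 0;
    bool inZCT = false;
    bool destroyed = false;
};

class ZeroCountTable
{
public:
    // A heap reference was stored (e.g. through a DRC/DRCWB-style pointer).
    // If the object is in the ZCT, Reap will notice its nonzero count.
    void IncRef(RCObj* o) { ++o->heapRefs; }

    // A heap reference was dropped: zero means "maybe dead", not "dead".
    void DecRef(RCObj* o)
    {
        if (--o->heapRefs == 0 && !o->inZCT)
        {
            o->inZCT = true;
            table_.push_back(o);
        }
    }

    // Objects in the ZCT have no heap references, so destroy every one that
    // is not referenced from the (simulated) stack. Returns the number reaped.
    std::size_t Reap(const std::vector<RCObj*>& stack)
    {
        std::size_t reaped = 0;
        std::vector<RCObj*> keep;
        for (RCObj* o : table_)
        {
            bool onStack = std::find(stack.begin(), stack.end(), o) != stack.end();
            if (o->heapRefs > 0)
                o->inZCT = false;       // re-referenced from the heap since reaching zero
            else if (onStack)
                keep.push_back(o);      // still pinned by a stack reference
            else
            {
                o->destroyed = true;    // stands in for destroying and freeing the object
                ++reaped;
            }
        }
        table_ = keep;
        return reaped;
    }

private:
    std::vector<RCObj*> table_;
};
```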

Incremental Collection

The Flash Player is frequently used for animations and video that must maintain a certain frame rate to play properly. Applications are also getting larger and consuming more memory, with scripting giving way to full-fledged application component models (à la Flex). Unfortunately, the Flash Player suffers a periodic garbage collection pause (at least every 60 seconds), and that pause is unbounded: it is proportional to the amount of memory the application is using. One way to avoid this unbounded pause is to break up the work the GC needs to do into "increments".

In order to collect garbage we must trace all the live objects and mark them. This is the part of the GC work that takes the most time, and the part that needs to be incrementalized. To incrementalize marking, it must be a process that can be stopped and restarted. Our marking algorithm is conservative, which makes marking automatic: there are no Mark() methods the GC engine needs to call, and marking is simply a tight loop that processes a queue. All GC roots are registered with the GC library, so it can mark everything by traversing the roots. At the beginning, all the GC roots are pushed onto the work queue. Items on the queue are conservatively marked, and unmarked GC pointers discovered while processing each item are pushed onto the queue. When the queue is empty, marking is complete. Thus the queue itself is a perfect way to maintain marking state between marking increments. This isn't an accident: the GC system was in part designed this way so that it could be easily incrementalized.
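The work-queue approach lends itself to a small sketch. It ignores the mutator changing the heap between increments, measures each increment's budget in queue items rather than time, and uses hypothetical names (Graph, IncrementalMarker) and integer object ids.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <vector>

// Toy object graph: objects identified by index, with outgoing references.
struct Graph
{
    std::vector<std::vector<std::size_t>> refs;
};

class IncrementalMarker
{
public:
    explicit IncrementalMarker(const Graph& g) : g_(g), marked_(g.refs.size(), false) {}

    // First increment: push all roots onto the work queue.
    void Start(const std::vector<std::size_t>& roots)
    {
        for (std::size_t r : roots) queue_.push_back(r);
    }

    // Process at most `budget` queue items, then stop. The queue holds all the
    // state needed to resume later. Returns true when marking is complete.
    bool Step(std::size_t budget)
    {
        while (budget > 0 && !queue_.empty())
        {
            std::size_t i = queue_.front();
            queue_.pop_front();
            --budget;
            if (marked_[i]) continue;
            marked_[i] = true;
            for (std::size_t r : g_.refs[i])
                if (!marked_[r]) queue_.push_back(r);
        }
        return queue_.empty();
    }

    bool IsMarked(std::size_t i) const { return marked_[i]; }

private:
    const Graph& g_;
    std::vector<bool> marked_;
    std::deque<std::size_t> queue_;
};
```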

The problem then becomes:

- How to account for the fact that the mutator is changing the state of the heap between marking increments

- How much time to spend marking in each increment

Mark consistency

A correct collector never deletes a live object (duh). To be correct, we must account for a new or unmarked object being stored into an object we've already marked. In implementation terms, this means a new or unmarked object is stored in an object that has already been processed by the marking algorithm and is no longer in the queue. Unless we do something, we will delete the stored object and leave a dangling pointer to it in the object that refers to it.

There are a couple of different techniques for this, but the most popular one, based on some research, uses a tri-color algorithm with write barriers. Every object is in one of three states: black, gray, or white.

- Black: the object has been marked and is no longer in the work queue

- Gray: the object is in the work queue but not yet marked

- White: the object isn't in the work queue and hasn't been marked

The first increment pushes all the roots onto the queue, so after this step all the roots are gray and everything else is white. As the queue is processed, every live object goes through two steps: from white to gray, and from gray to black. Whenever a pointer to a white object is written into a black object, we have to intercept that write and remember to go back and put the white object in the work queue; that's what a write barrier does. We don't care about the other scenarios:

- Gray written to Black/Gray/White - since the object is gray, it's on the queue and will be marked before we sweep

- White written to Gray - the white object will be marked as reachable when the gray object is marked

- White written to White - the referant will either eventually become gray if its reachable or not in which case both objects will get marked

- Black written to Black/Gray/White - its black, its already been marked
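The case analysis above reduces to a single test. The sketch below, with hypothetical `Color`, `Obj` and `WriteField` names, shows that predicate and a barrier that grays the stored object by pushing it onto the work queue. It illustrates the rule, not MMgc's actual write barrier.

```cpp
#include <cassert>
#include <vector>

enum class Color { White, Gray, Black };

// Hypothetical heap object with a single pointer field.
struct Obj {
    Color color = Color::White;
    Obj* field = nullptr;
};

// Of all the container/stored color combinations, only one can hide a
// live object from the marker: a white object stored into a black one.
constexpr bool NeedsWriteBarrier(Color container, Color stored) {
    return container == Color::Black && stored == Color::White;
}

// Pointer store guarded by a write barrier. In the dangerous case the
// stored object is grayed (pushed onto the work queue) before the write.
void WriteField(Obj* container, Obj* value, std::vector<Obj*>& workQueue) {
    if (value != nullptr && NeedsWriteBarrier(container->color, value->color)) {
        value->color = Color::Gray;
        workQueue.push_back(value);
    }
    container->field = value;
}
```

Every other combination falls through to a plain store, which is why the barrier's fast path is cheap.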

So a write barrier needs to be inserted anywhere we could possibly store a pointer to a white object into a black object. In practice this means:

1. Setting a property on an object to another object (creating an arc in the reachability graph)

2. Native code that writes a pointer to a GC object into another GC object

3. Writing an object to a GC root

#1 and #2 are pretty well isolated. #1 is the SetSlot method in the AVM- and some assembly code in the AVM+. #2 can be found by examining all non-const methods of GC objects (and making all fields private, something the AVM+ code base does already). #3 is a little harder because there are a good number of GC roots. This is an unfortunate artifact of the existing code base: the AVM+ is relatively clean and its reachability graph consists of basically 2 GC roots (the AvmCore and URLStreams), but the AVM- has a bunch (currently including SecurityCallbackData, MovieClipLoader, CameraInstance, FAPPacket, MicrophoneInstance, CSoundChannel, URLRequest, ResponceObject, URLStream and UrlStreamSecurity). To make things easier, we could avoid WBs for #3 by marking the root set twice. The first increment pushes the root set onto the queue, and when the queue is empty we process the root set again. This second pass over the root set should be very fast, since the majority of objects should already be marked and the root set is usually small (marked objects are ignored and not pushed onto the work queue). This would mean that, when writing new code, developers would really only have to take #2 into account.
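A sketch of the "mark the root set twice" idea, assuming roots are plain pointer slots with no write barrier at all (`RootRescanMarker` and `Node` are hypothetical). Anything stored into a root after the first pass is picked up by the final re-scan, and already-marked objects are skipped, so the second pass is cheap. It does nothing for case #2: a white object stored into a black heap object still needs a real barrier.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Node {
    bool marked = false;
    std::vector<Node*> refs;
};

// Hypothetical marker: roots are plain pointer slots with NO write barrier.
// Instead, after the queue drains, the root set is scanned a second time.
class RootRescanMarker {
public:
    explicit RootRescanMarker(std::vector<Node*>& roots) : roots_(roots) {}

    // First pass over the roots.
    void Start() { PushRoots(); }

    // Empties the work queue. A real collector would split this into
    // increments interleaved with mutator work.
    void Drain() {
        while (!queue_.empty()) {
            Node* n = queue_.back();
            queue_.pop_back();
            for (Node* r : n->refs) Push(r);
        }
    }

    // Second pass: pick up anything stored into a root since Start().
    // Returns how many newly marked objects the re-scan found.
    size_t FinishWithRootRescan() {
        size_t before = pushes_;
        PushRoots();
        Drain();
        return pushes_ - before;
    }

private:
    void PushRoots() {
        for (Node* r : roots_) Push(r);
    }

    void Push(Node* n) {
        if (n == nullptr || n->marked) return;  // marked objects are skipped
        n->marked = true;
        queue_.push_back(n);
        ++pushes_;
    }

    std::vector<Node*>& roots_;
    std::vector<Node*> queue_;
    size_t pushes_ = 0;
};
```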

Illustration of Write Barriers

The following Flash animation demonstrates how a write barrier works.

(Flash animation GC2.swf, 600x300; temporarily not working)

Detecting missing Write Barriers

To make sure that we've injected write barriers into all the right places, we plan to implement a debug mode that searches for missing write barriers. The signature of a missing write barrier is a black-to-white pointer that exists right before we sweep; after the sweep, the pointer will point to deleted memory. We can also check throughout the incremental mark by making sure any black-to-white pointers have been recorded by the write barrier. Furthermore, we can run this check even more frequently than every mark increment, for instance every time our GC memory allocators request a new block from our primary allocator (the way our extremely helpful greedy collection mode works). This would of course be slow, but with good code coverage it should be capable of finding all missing write barriers. Checking for missing write barriers only before each sweep will probably have a small enough performance impact that we can enable it in DEBUG builds. The more frequent checks will have to be turned on manually.
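A sketch of the debug check, assuming the collector can enumerate every allocated object and that the barrier records the objects it has seen in a set (`FindMissingBarriers`, `Obj` and `recorded` are hypothetical). Run just before the sweep with an empty `recorded` set, any reported edge is a pointer that would dangle after the sweep.

```cpp
#include <cassert>
#include <unordered_set>
#include <utility>
#include <vector>

enum class Color { White, Gray, Black };

struct Obj {
    Color color = Color::White;
    std::vector<Obj*> refs;
};

// Scans every black object for pointers to white objects. An edge whose
// white target was not recorded by the write barrier is the signature of
// a missing barrier. Returns the offending (container, target) pairs.
std::vector<std::pair<Obj*, Obj*>> FindMissingBarriers(
        const std::vector<Obj*>& heap,
        const std::unordered_set<Obj*>& recorded) {
    std::vector<std::pair<Obj*, Obj*>> missing;
    for (Obj* o : heap) {
        if (o->color != Color::Black) continue;
        for (Obj* target : o->refs) {
            if (target != nullptr && target->color == Color::White &&
                recorded.count(target) == 0) {
                missing.emplace_back(o, target);
            }
        }
    }
    return missing;
}
```

The cost is a full heap walk per check, which is why running it before every sweep is plausible in DEBUG builds while running it on every block request is not.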

Write Barrier Implementation

There are a couple of options for implementing write barriers. At the finest level of granularity, every time a white object is written to a black object we push the white object onto the work queue (thus making it gray). Another solution is to put the black object on the work queue; then, if multiple writes occur to the black object, we only need one push onto the queue. This could be a significant speedup if the black object is a large array being populated with a bunch of new objects. On the other hand, if the black object is a huge array and only a couple of slots had new objects written to them, we waste time by marking the whole thing.
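The two granularities can be compared with a small sketch (hypothetical `Obj`, `Store` and barrier functions, not MMgc code). Filling a black 100-slot array with 100 white objects costs 100 queue pushes under the first variant and a single push under the second, at the price of rescanning the whole array later.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

enum class Color { White, Gray, Black };

struct Obj {
    Color color = Color::White;
    std::vector<Obj*> slots;
};

// Variant 1: gray the stored white object. One queue push per write.
void BarrierPushValue(Obj* container, Obj* value, std::vector<Obj*>& queue) {
    if (container->color == Color::Black && value != nullptr &&
        value->color == Color::White) {
        value->color = Color::Gray;
        queue.push_back(value);
    }
}

// Variant 2: revert the black container to gray. Later writes into the same
// container find it gray and need no further push, but the marker will
// rescan the entire container, not just the slots that changed.
void BarrierPushContainer(Obj* container, Obj* value, std::vector<Obj*>& queue) {
    if (container->color == Color::Black && value != nullptr &&
        value->color == Color::White) {
        container->color = Color::Gray;
        queue.push_back(container);
    }
}

// Stores value into container->slots[i] through the chosen barrier.
template <typename Barrier>
void Store(Obj* container, size_t i, Obj* value, std::vector<Obj*>& queue,
           Barrier barrier) {
    barrier(container, value, queue);
    container->slots[i] = value;
}
```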

A popular solution to this is called card marking. Here you divide memory into "cards", and when a white object is written to a black object you flag the card containing the slot the pointer was written to. After all marking is done, you circle back and remark the black portion of any card that was flagged by the write barrier. There are two techniques to optimize this process. One is to save the addresses of all pages that had cards flagged (so you don't have to bring every page into memory to check its hand, so to speak). The other is to check every page during normal marking and, if any of its cards were flagged, handle them immediately, since you're already reading/writing that page. As a result, fewer things need to be marked at the end of the mark cycle. These two optimizations can be combined.
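A minimal card table, assuming a single contiguous region and an arbitrarily chosen 128-byte card (`CardTable`, `kCardSize` and the rescan callback are hypothetical). The barrier only sets a byte; the end-of-mark pass hands each flagged card to a rescan routine once and clears it. Keeping a separate list of dirty pages, the first optimization above, would let that pass skip the full scan over the flag array.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical card table over one contiguous region. Each card covers
// kCardSize bytes; the write barrier just sets that card's flag.
class CardTable {
public:
    static constexpr size_t kCardSize = 128;  // assumed card size

    CardTable(uintptr_t base, size_t bytes)
        : base_(base), bytes_(bytes),
          dirty_((bytes + kCardSize - 1) / kCardSize, 0) {}

    // Write barrier: flag the card containing the slot that was written.
    void MarkCard(const void* slot) {
        uintptr_t addr = reinterpret_cast<uintptr_t>(slot);
        assert(addr >= base_ && addr < base_ + bytes_);
        dirty_[(addr - base_) / kCardSize] = 1;
    }

    // End of marking: hand each flagged card to `rescan` exactly once so
    // the black objects on it can be remarked, then clear the flag.
    // Returns the number of cards processed.
    template <typename Rescan>
    size_t ProcessDirtyCards(Rescan rescan) {
        size_t n = 0;
        for (size_t i = 0; i < dirty_.size(); ++i) {
            if (!dirty_[i]) continue;
            rescan(base_ + i * kCardSize, kCardSize);
            dirty_[i] = 0;
            ++n;
        }
        return n;
    }

private:
    uintptr_t base_;
    size_t bytes_;
    std::vector<uint8_t> dirty_;
};
```

Two writes into neighboring slots flag the same card, so the final pass rescans that card once.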

Increment Time Slice

Before diving into this, it should be acknowledged that another approach is to use a background thread and not worry about incremental marking. This approach was not chosen for the following reasons:

- Coordinating the marking thread and the main thread will require locking and may suffer due to lock overhead/contention

- Supporting Mac Classic's cooperative threads makes this approach harder

- Flash's frame based architecture gives us a very natural place to do this work

- We have better control over how much time is spent marking without threads

When SMP systems become more prevalent it may be worth investigating this approach because true parallelism may afford better performance.

Another point to consider is whether marking should always be on, or should be turned on and off based on memory allocation patterns. I think we want the latter because:

- All WBs take the fast path when we're not marking, so the more time we spend outside the marking phase, the better overall performance will be

- Applications that have low or steady state memory requirements shouldn't suffer any marking penalty

The first thing to determine is when to start marking. Currently we decide when to do a collection based on how much memory has been allocated since the last collection: if it's over a certain fraction of the total heap size we do a collection, and if it's not we expand. Similarly, we can decide to start marking once we've consumed a certain portion of the heap since the last collection; call this the ISD (incremental start divisor). So with an ISD of 4 we start marking when a quarter of the heap is left, and an ISD of 1 means we're always marking.
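The ISD rule can be written as a single comparison. This sketch assumes "the heap left" means the allocation budget remaining before the next collection would be triggered; `ShouldStartMarking` and its parameters are hypothetical.

```cpp
#include <cassert>
#include <cstddef>

// allocatedSinceGC: bytes allocated since the last collection.
// collectBudget:    bytes that may be allocated before we would collect.
// isd:              incremental start divisor (1 = always marking).
// Marking starts once the remaining budget drops to collectBudget / isd.
bool ShouldStartMarking(size_t allocatedSinceGC, size_t collectBudget, size_t isd) {
    assert(isd >= 1);
    size_t remaining = allocatedSinceGC >= collectBudget
                           ? 0
                           : collectBudget - allocatedSinceGC;
    return remaining <= collectBudget / isd;
}
```

With a 1000-byte budget and an ISD of 4, marking begins once 750 bytes have been allocated; with an ISD of 1 the condition holds from the start.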

Now that we know when we start marking there are two conflicting goals to achieve in selecting the marking time slice:

- Maintain the frame rate

- Make sure the collector gets to the sweep stage soon enough to avoid too much heap expansion

If we don't maintain the frame rate, the movie will appear to pause; if we don't mark fast enough, the mutator could get ahead of the collector and allocate memory so fast that the collection never finishes and memory grows unbounded. Ideally there will be only one mark increment per frame, unless the mutator is allocating memory so fast that we need to mark more aggressively to get to the sweep. So the frequency of incremental marking will be based on two factors: the rate at which we can trace memory and the rate at which the mutator is requesting more memory. Study of real-world apps will be used to determine how best to combine these two rates.
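The exact pacing formula is left open above, so the following is only one plausible heuristic with hypothetical names and inputs: estimate how many frames remain before the mutator exhausts its allocation budget, spread the remaining tracing work over those frames, and spend at least one normal slice per frame but never more than the time needed to finish.

```cpp
#include <algorithm>
#include <cassert>

// bytesLeftToMark:  estimated live bytes not yet traced
// budgetLeft:       bytes the mutator may still allocate before we must sweep
// allocPerFrame:    bytes the mutator allocated in the last frame
// traceBytesPerMs:  measured marking throughput
// frameSliceMs:     time one increment may take without hurting the frame rate
// Returns the milliseconds of marking to do this frame.
double MarkTimeThisFrame(double bytesLeftToMark, double budgetLeft,
                         double allocPerFrame, double traceBytesPerMs,
                         double frameSliceMs) {
    // Frames left before the budget runs out (at least one).
    double framesLeft = std::max(1.0, budgetLeft / std::max(allocPerFrame, 1.0));
    // Tracing work per frame needed to reach the sweep in time.
    double neededMs = (bytesLeftToMark / framesLeft) / traceBytesPerMs;
    // Time to finish all remaining marking; never spend more than that.
    double finishMs = bytesLeftToMark / traceBytesPerMs;
    // Normally one slice per frame; exceed it only when a single slice
    // would let the mutator get ahead of the collector.
    return std::min(finishMs, std::max(frameSliceMs, neededMs));
}
```

With a slow allocator this returns the normal slice; when the allocator speeds up, the returned time grows so that marking still completes before the budget is gone.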

GCHeap

The GC library has a tiered memory allocation strategy, consisting of 3 parts:

- A page-granular memory allocator called the GCHeap

- A set of fixed size allocators for sizes up to 2K

- A large allocator for items over 2K

When you want to allocate something, we figure out what size class it's in and then ask that allocator for the memory. Each fixed size allocator maintains a doubly linked list of 4K blocks that it obtains from the GCHeap. These 4K blocks are aligned on 4K boundaries, so we can easily allocate everything on 8-byte boundaries (a necessary consequence of the 32-bit atom design: 3 type bits and 29 pointer bits). We also store the GCAlloc::GCBlock structure at the beginning of each 4K block, so an allocation doesn't need a pointer to its block (just zero the lower 12 bits of any GC-allocated thing to get the GCBlock pointer). The GCBlock contains bitmaps for marking and for indicating whether an item has a destructor that needs to be called (a GCFinalizedObject base class exists that defines a virtual destructor for GC items that need it). Deleted items are stored in a per-block free list, which is used if it has any entries; otherwise we take the next free item at the end of the block. If nothing is free and we've reached the end, we get another block from the GCHeap.
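A sketch of the block layout described above, with a hypothetical `BlockHeader` standing in for GCAlloc::GCBlock (the mark and finalize bitmaps are omitted). It shows the 8-byte size rounding, finding the header by masking off the low 12 bits, and the free-list-first allocation order. It uses C++17 `std::aligned_alloc` in place of obtaining a block from the GCHeap.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <new>

constexpr uintptr_t kBlockSize = 4096;  // blocks are 4K and 4K-aligned

// Hypothetical stand-in for GCAlloc::GCBlock, stored at the block start.
struct BlockHeader {
    size_t itemSize;  // this block's size class
    void* freeList;   // deleted items, linked through their first word
    char* nextFree;   // first never-used item toward the end of the block
    char* limit;      // end of the block
};

// Round a request up to an 8-byte multiple.
constexpr size_t RoundTo8(size_t n) { return (n + 7) & ~static_cast<size_t>(7); }

// Zero the low 12 bits of any item address to find its block header.
inline BlockHeader* GetBlock(const void* item) {
    return reinterpret_cast<BlockHeader*>(
        reinterpret_cast<uintptr_t>(item) & ~(kBlockSize - 1));
}

BlockHeader* NewBlock(size_t requestSize) {
    assert(requestSize > 0);
    void* mem = std::aligned_alloc(kBlockSize, kBlockSize);
    if (mem == nullptr) return nullptr;
    char* base = static_cast<char*>(mem);
    // Items start right after the header, on an 8-byte boundary.
    return new (mem) BlockHeader{RoundTo8(requestSize), nullptr,
                                 base + RoundTo8(sizeof(BlockHeader)),
                                 base + kBlockSize};
}

void* AllocFromBlock(BlockHeader* b) {
    if (b->freeList != nullptr) {  // reuse a deleted item first
        void* item = b->freeList;
        b->freeList = *static_cast<void**>(item);
        return item;
    }
    if (static_cast<size_t>(b->limit - b->nextFree) >= b->itemSize) {
        void* item = b->nextFree;  // otherwise take the next fresh item
        b->nextFree += b->itemSize;
        return item;
    }
    return nullptr;  // block full: the caller would get a new block
}

void FreeToBlock(void* item) {
    BlockHeader* b = GetBlock(item);  // no per-item back pointer needed
    *static_cast<void**>(item) = b->freeList;
    b->freeList = item;
}
```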

GCHeap's reserve/commit strategy

GCHeap reserves 16MB of address space per heap region. The goal of reserving so much address space is so that subsequent expansions of the heap are able to obtain contiguous memory blocks. If we can keep the heap contiguous, that reduces fragmentation and the possibility of many small "Balkanized" heap regions.

Reserving 16MB of space per heap region should not be a big deal in a 2GB address space: it would take a lot of Player instances running simultaneously to exhaust the 2GB address space of the browser process. By allocating contiguous blocks of address space and managing them ourselves, we may actually decrease fragmentation of the IE heap.

On Windows, this uses the VirtualAlloc API to obtain memory. On Mac OS X and Unix, we use mmap. VirtualAlloc and mmap can reserve memory and/or commit memory. Reserved memory is just virtual address space. It consumes the address space of the process but isn't really allocated yet; there are no pages committed to it yet. Memory allocation really occurs when reserved pages are committed. Our strategy in GCHeap is to reserve a fairly large chunk of address space, and then commit pages from it as needed. By doing this, we're more likely to get contiguous regions in memory for our heap.
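A POSIX sketch of reserve-then-commit, not GCHeap's actual code (`Reserve`, `Commit` and `Release` are hypothetical helpers). Reserving maps the range with `PROT_NONE`, so the address space is claimed but no page is usable yet; committing makes a sub-range readable and writable with `mprotect`. On Windows the equivalent is `VirtualAlloc` with `MEM_RESERVE`, followed by `VirtualAlloc` with `MEM_COMMIT` on the pages actually needed.

```cpp
#include <cassert>
#include <cstddef>
#include <sys/mman.h>

// Claim address space only: PROT_NONE pages cannot be touched.
void* Reserve(size_t bytes) {
    void* p = mmap(nullptr, bytes, PROT_NONE, MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    return p == MAP_FAILED ? nullptr : p;
}

// Make part of a reservation usable. `addr` must be page-aligned; the OS
// supplies zero-filled pages on first touch.
bool Commit(void* addr, size_t bytes) {
    return mprotect(addr, bytes, PROT_READ | PROT_WRITE) == 0;
}

// Give the whole reservation back to the OS.
bool Release(void* addr, size_t bytes) {
    return munmap(addr, bytes) == 0;
}
```

Committing from the front of one large reservation is what keeps successive heap expansions contiguous.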

GCHeap serves up 4K blocks to the size class allocators or groups of contiguous 4K blocks for requests from the large allocator. It maintains a free list and blocks are coalesced with their neighbors when freed. If we use up the 16MB reserved chunk, we reserve another one, contiguously with the previous if possible.
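A sketch of a block free list with coalescing, modeling pages as indices in one contiguous region (`PageFreeList` is hypothetical, and the first-fit policy is an assumption). Free runs are kept in a `std::map` keyed by start page, so the neighbors of a freed run are found with one `lower_bound` and merged on both sides.

```cpp
#include <cassert>
#include <cstddef>
#include <iterator>
#include <map>

class PageFreeList {
public:
    static constexpr size_t kNone = static_cast<size_t>(-1);

    explicit PageFreeList(size_t totalPages) {
        if (totalPages > 0) free_[0] = totalPages;
    }

    // First-fit allocation of `count` contiguous pages. Returns the start
    // page, or kNone if no run is large enough (the heap would then expand).
    size_t Alloc(size_t count) {
        for (auto it = free_.begin(); it != free_.end(); ++it) {
            if (it->second < count) continue;
            size_t start = it->first;
            size_t len = it->second;
            free_.erase(it);
            if (len > count) free_[start + count] = len - count;  // keep the tail
            return start;
        }
        return kNone;
    }

    // Return a run and coalesce it with free neighbors on both sides.
    void Free(size_t start, size_t count) {
        auto next = free_.lower_bound(start);
        if (next != free_.end() && start + count == next->first) {
            count += next->second;  // merge with the following run
            next = free_.erase(next);
        }
        if (next != free_.begin()) {
            auto prev = std::prev(next);
            if (prev->first + prev->second == start) {
                prev->second += count;  // merge into the preceding run
                return;
            }
        }
        free_[start] = count;
    }

    size_t RunCount() const { return free_.size(); }

private:
    std::map<size_t, size_t> free_;  // start page -> run length
};
```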

When memory mapping is not available

GCHeap can fall back on a malloc/free approach for obtaining memory if a memory mapping API like VirtualAlloc or mmap is not available. In this case, GCHeap will allocate exactly as much memory as is requested when the heap is expanded and not try to reserve additional memory pages to expand into. GCHeap won't attempt to allocate contiguous regions in this case.

We currently use VirtualAlloc on Windows (supported on all flavors of Windows back to 95) and mmap on Mach-O and Linux. On Classic and Carbon, we do not currently use a memory mapping strategy; these implementations call MPAllocAligned, which can allocate 4096-byte aligned memory. We could potentially bind to the Mach-O framework dynamically from Carbon, if the user's system is Mac OS X, and call mmap/munmap.